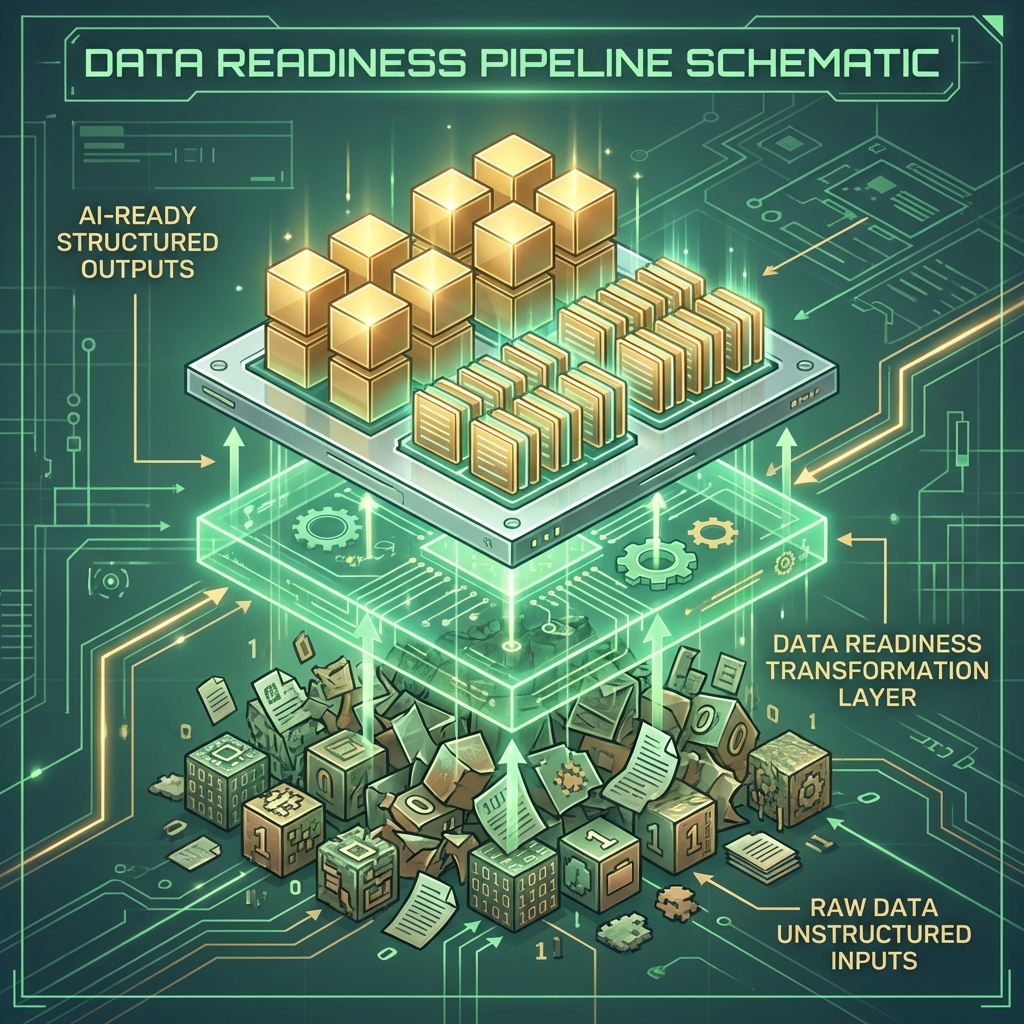

You can have the most advanced LLM in the world (GPT-5, Gemini Ultra, Claude Opus), but if you feed it garbage, it will hallucinate garbage. In 2026, Data Readiness is, in our experience, the single biggest predictor of whether an AI project launches or crashes. The "Model Wars" are over; the "Data Wars" have begun.

Why Most AI Pilots Crash on Takeoff

We see it constantly. A company wants an "AI Chatbot for Sales." They connect a RAG (Retrieval-Augmented Generation) system to their SharePoint and... disaster strikes.

The Symptoms of Bad Data:

- The AI quotes a pricing PDF from 2019 because it wasn't archived properly.

- It hallucinates features from a deprecated product line because the "End of Life" notice was an email, not a database field.

- It accesses sensitive HR documents it wasn't supposed to see because permissions were loose.

The model wasn't broken. The data infrastructure was built for humans, not machines. Humans use "common sense" to ignore the folder marked "OLD_DO_NOT_USE". Machines treat every available byte as absolute truth.

The 3 Pillars of AI Data Readiness

To get "AI Ready," you need to treat your data like a product, not a byproduct.

Hygiene & Structure (ETL)

The Problem: Unstructured data (PDFs, emails, Slack threads) is messy. Duplicate records confuse the AI (e.g., "John Smith" vs "J. Smith").

The Fix: We implement rigorous ETL pipelines. We run OCR on scanned and legacy documents, deduplicate records using fuzzy matching, and convert unstructured text into clean Markdown that LLMs love.
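To make the fuzzy-matching step concrete, here is a minimal sketch using only Python's standard library. The function names and the 0.7 threshold are illustrative assumptions, not our production pipeline; real deduplication usually compares more fields than a name.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def is_duplicate(a: str, b: str, threshold: float = 0.7) -> bool:
    """Treat two names as the same record if their similarity ratio is high.

    The threshold is a hypothetical starting point; tune it on your own data.
    """
    ratio = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
    return ratio >= threshold


def deduplicate(names: list[str], threshold: float = 0.7) -> list[str]:
    """Keep the first occurrence of each name and drop later near-duplicates."""
    kept: list[str] = []
    for name in names:
        if not any(is_duplicate(name, existing, threshold) for existing in kept):
            kept.append(name)
    return kept
```

Calling `deduplicate(["John Smith", "J. Smith", "Alice Wong"])` keeps "John Smith" and "Alice Wong" and drops "J. Smith" as a near-duplicate. This pairwise comparison is quadratic, so for large datasets you would typically group candidates first (for example by email domain or postcode) and only compare within each group.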

Access Control (RBAC)

The Problem: If you connect an AI to your Google Drive, it searches everything. Do you want the intern's chatbot to find the CEO's salary spreadsheet?

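The Fix: We implement "Metadata Filtering." Every chunk of data is tagged with permissions (e.g., `group:hr`, `group:public`). The filter is enforced at retrieval time, before anything reaches the model, so the AI never sees a chunk the current user isn't allowed to read.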
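A minimal sketch of metadata filtering, assuming each chunk carries a set of group tags like the ones above. The `Chunk` class and `filter_by_permission` function are hypothetical names for illustration; most vector databases offer an equivalent metadata filter that runs inside the search query itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_groups: frozenset  # e.g. frozenset({"group:hr"})


def filter_by_permission(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Return only chunks that share at least one group tag with the user.

    Deny by default: a chunk with no tags is never returned.
    """
    return [c for c in chunks if c.allowed_groups & set(user_groups)]


# Illustrative data only.
chunks = [
    Chunk("Q3 product brochure", frozenset({"group:public"})),
    Chunk("Executive salary bands", frozenset({"group:hr"})),
    Chunk("Untagged legacy memo", frozenset()),
]

intern_view = filter_by_permission(chunks, {"group:public"})
hr_view = filter_by_permission(chunks, {"group:public", "group:hr"})
```

Here `intern_view` contains only the brochure, while `hr_view` also includes the salary document. The untagged memo is hidden from both, which is the safer default when tagging is incomplete.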

Context & Freshness

The Problem: Data rots. A sales figure from 2023 is factually "true" but strategically "false" today.

The Fix: We tag your knowledge base with "Time-to-Live" (TTL) markers so that expired content drops out of retrieval. We also prioritize recent documents in the vector search ranking so the AI prefers the latest truth.
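One way to combine TTL markers with recency-aware ranking is sketched below. It assumes the vector search returns a similarity score between 0 and 1 for each hit; the `Hit` class, the `rerank` function, and the 180-day half-life are illustrative assumptions, not a fixed recipe.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Hit:
    text: str
    similarity: float     # score from the vector search, assumed to be in [0, 1]
    updated_at: datetime  # when the source document was last revised
    ttl: timedelta        # how long the document is considered valid


def rerank(hits: list[Hit], now: datetime, half_life_days: float = 180.0) -> list[Hit]:
    """Drop hits past their TTL, then rank the rest by similarity times recency.

    The recency weight halves every `half_life_days` (a hypothetical default).
    """
    live = [h for h in hits if now - h.updated_at <= h.ttl]

    def score(h: Hit) -> float:
        age_days = (now - h.updated_at).total_seconds() / 86400
        return h.similarity * 0.5 ** (age_days / half_life_days)

    return sorted(live, key=score, reverse=True)


# Illustrative data only.
now = datetime(2026, 1, 1, tzinfo=timezone.utc)
hits = [
    Hit("2023 sales figures", 0.90, datetime(2023, 1, 1, tzinfo=timezone.utc), timedelta(days=1825)),
    Hit("Current sales figures", 0.80, datetime(2025, 12, 1, tzinfo=timezone.utc), timedelta(days=365)),
    Hit("2019 pricing PDF", 0.95, datetime(2019, 6, 1, tzinfo=timezone.utc), timedelta(days=365)),
]
ranked = rerank(hits, now)
```

In this example the 2019 pricing PDF is removed because its TTL has lapsed, and the current sales figures outrank the 2023 figures even though the older document has a slightly higher raw similarity. The half-life is the main tuning knob: a shorter value suits fast-moving content such as pricing, a longer one suits stable reference material.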

EkaivaKriti's "Data First" Methodology

We refuse to build fragile AI. We start every engagement with a Data Health Check. We fix your foundation before we pour the concrete.

This approach sometimes delays the "cool AI demo" by 2 weeks. But it prevents the project from dying 3 months later due to trust issues. We believe in Sustainable Intelligence.

Is Your Data Ready for AI?

Don't guess. Let our data engineers assess your readiness score. We'll crawl your data sources, identify risks, and give you a roadmap to get "AI Ready" in 30 days.

Get Your Data Health Check